MAST

“MAST” stands for the Multimodal Annotation Software Tool, a program we have built to help researchers analyze the properties of media with visuals and/or text. MAST was first conceived by Neil Cohn as part of the TINTIN Project, and was designed and programmed by Bruno Cardoso.

MAST can be used to annotate the properties of any media that uses static visuals, with or without text. You can also create Teams of users to collaborate on large projects and share documents, and you can explore theories by annotating with your own schemas or with schemas that other researchers have made public.

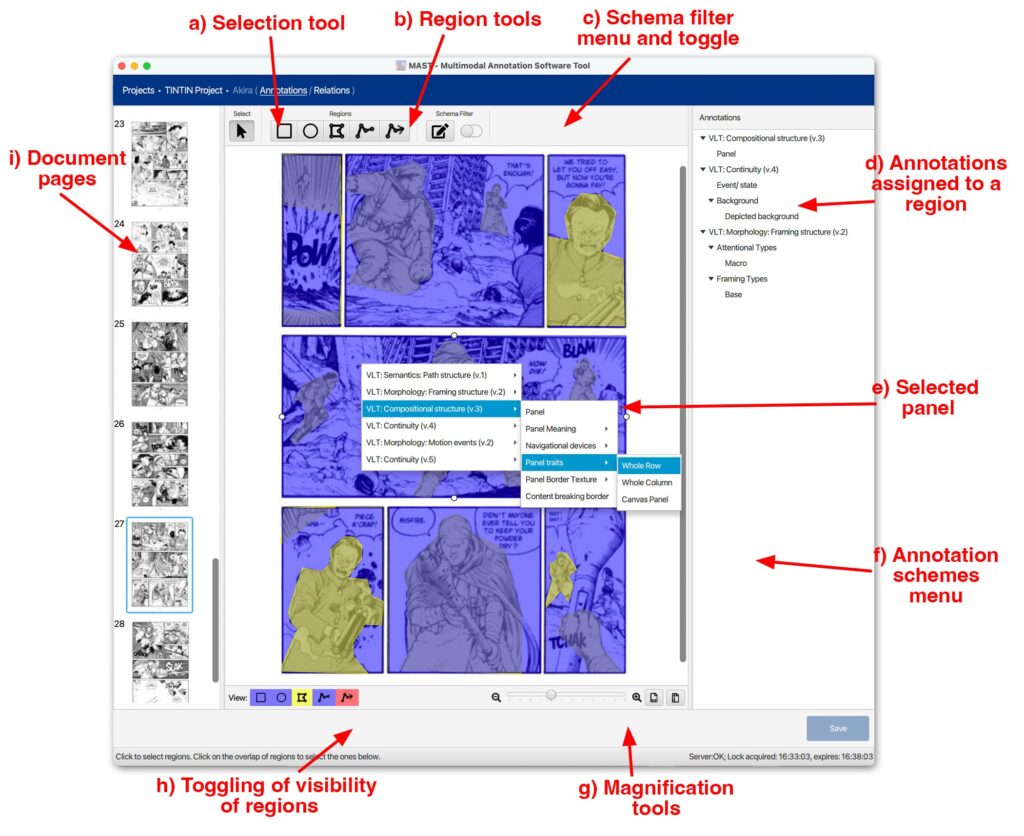

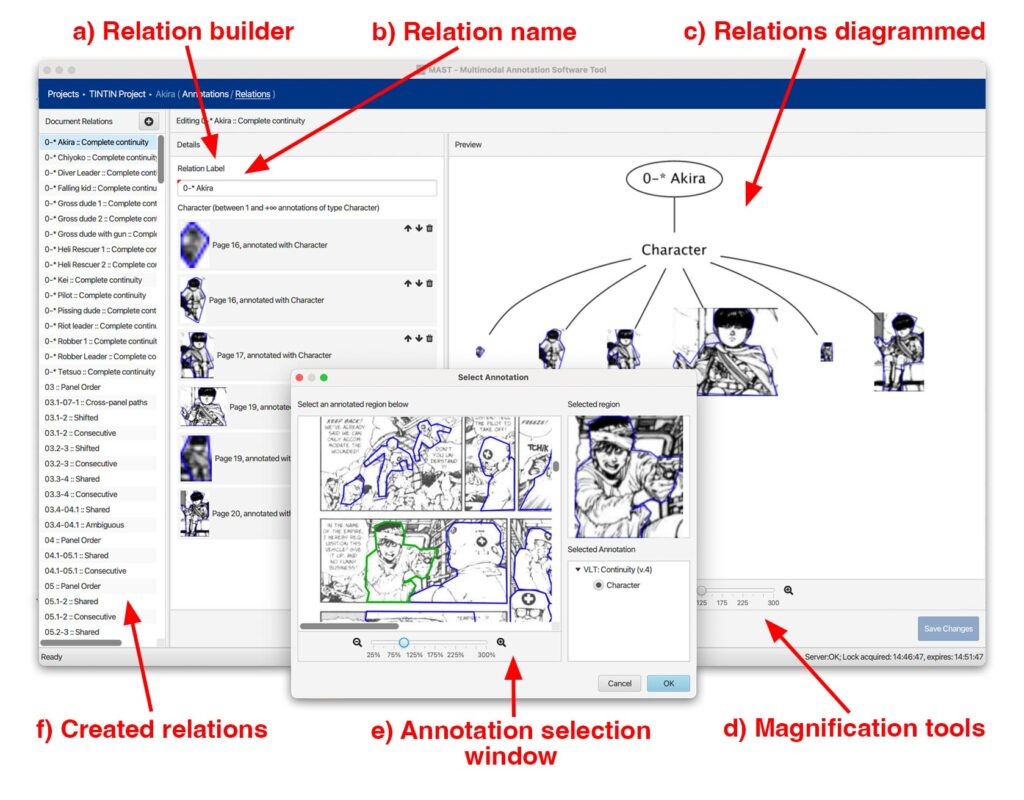

Within MAST’s Annotation Editor, users can draw flexibly shaped regions around areas of interest in a document and then annotate those regions using user-defined schemas. Multiple annotations can be applied to each region, and in a separate Relations Editor, annotations can be linked into hierarchical dependencies or groupings.

Want to read more about MAST? Check out our blog posts for updates and insights.

MAST provides a powerful, flexible, and collaborative interface for empirical research on multimodal documents.

MAST runs on the Java Virtual Machine, so it can run on any operating system where Java is installed (see our installation instructions). To download the latest version of MAST, click the link below. We recommend always keeping MAST up to date, so if you use it, please watch this space for updates or ask to be added to our email list.

Interested in using MAST? Please email Neil Cohn.

If you use MAST and report the data in a paper or other publication, please cite this paper:

Cardoso, Bruno, and Neil Cohn. 2022. The Multimodal Annotation Software Tool (MAST). In Proceedings of the 13th Language Resources and Evaluation Conference, 6822–6828. Marseille, France: European Language Resources Association.

MAST Resources: Annotation Schemas

Within MAST you can create your own annotation schemas to analyze visual and multimodal documents. You can keep your annotation schemas private to your own research, or you can publish them within MAST so that other researchers can use them.

As part of our research, we have made several schemas available for annotating constructs from Visual Language Theory (VLT). These schemas are available within MAST, and you can learn more about them in the downloadable annotation guides (PDFs) listed below. If you annotate with these schemas or analyze data produced with them, we ask that you cite these documents (dates indicate the last update):

VLT: Compositional Structure v.2 (3/22)

VLT: Compositional Structure: Internal v.2 (3/22)

VLT: Semantics: Continuity v.5 (3/22)

VLT: Semantics: Motion Events v. (3/22)

VLT: Semantics: Conceptual Structure v. (3/22)

VLT: Morphology: Framing v.2 (3/22)

VLT: Morphology: Carriers v.1 (3/22)

VLT: Morphology: Motion Events v. (3/22)

VLT: Morphology: Perspective Taking v. (3/22)

VLT: Morphology: Gesture v. (3/22)

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 850975).