Collaboration

My goal has always been to build a community of researchers interested in examining the structure and cognition of visual language. For those interested in doing research on visual language generally, this site has several resources providing advice and pointing towards research materials.

If you are currently a student in the Netherlands and interested in helping with research, please contact me directly.

I am always interested in pursuing research with motivated collaborators. If you would like to collaborate with me on visual language research, please contact me. Below are our collaborators on a variety of projects. Additional collaborators are associated specifically with the TINTIN Project.

Ongoing research collaborations…

Annika Andersson (Linnaeus University) collaborates on a project investigating the neurocognition of children’s processing of visual narratives and language, particularly children with developmental language disorder.

Emily Coderre (University of Vermont) collaborates on research related to the comprehension of visual narratives and language in Autism Spectrum Disorder and visual narrative fluency. We have studied predictive processing along with Trevor Brothers (Tufts University), and are studying narrative comprehension with Emily Zane (James Madison University).

Tom Foulsham (University of Essex) collaborates on projects related to the intersection of attention and visual narratives, using both eye tracking and online measures.

Joseph Magliano (Georgia State University) researches discourse and education, and collaborates on projects related to multimodal narrative comprehension, along with John Hutson, Daniel Feller, and Lester Loschky (Kansas State University).

Mirella Manfredi (University of Zurich) collaborates on projects related to the development of semantic knowledge across and between modalities.

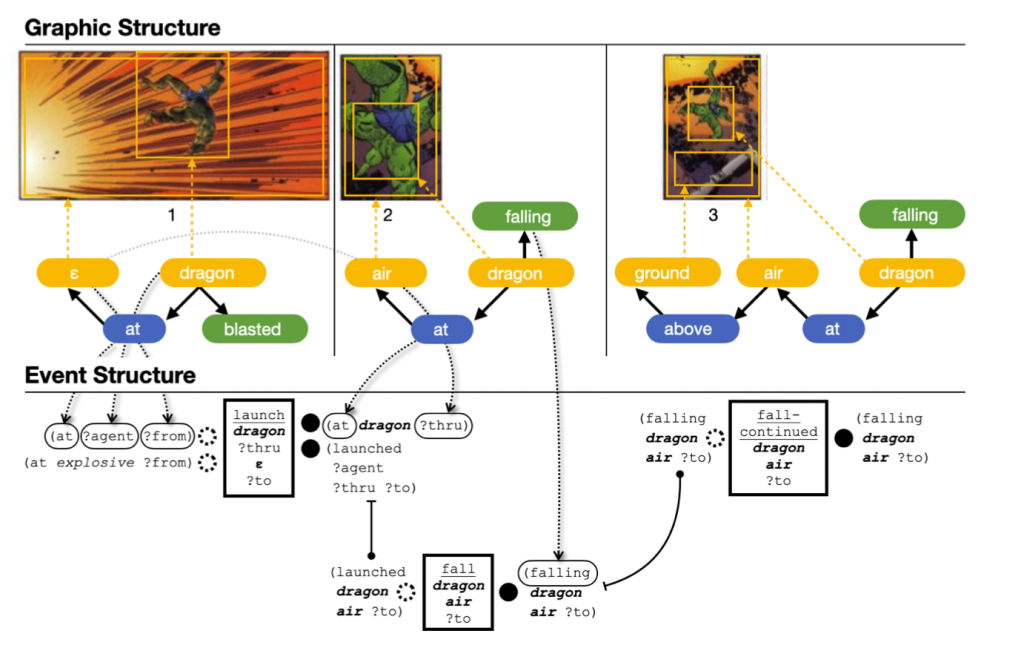

Chris Martens (North Carolina State University) and Rogelio E. Cardona-Rivera (University of Utah) are working on implementing a computational model of visual narrative comprehension and generation.

Ben Weissman (Rensselaer Polytechnic Institute) collaborates on projects related to emoji, specifically emoji vocabulary and grammar.

Yen Na Yum (Hong Kong Institute of Education) collaborates on projects investigating the effectiveness of multimodal narratives for education, particularly for second language learning.